The Era of 1-bit LLMs: A New Dawn for Powerful and Efficient Language Models

The Era of 1-bit LLMs: Unveiling the Power of 1.58 Bits in Language Models

Introduction:

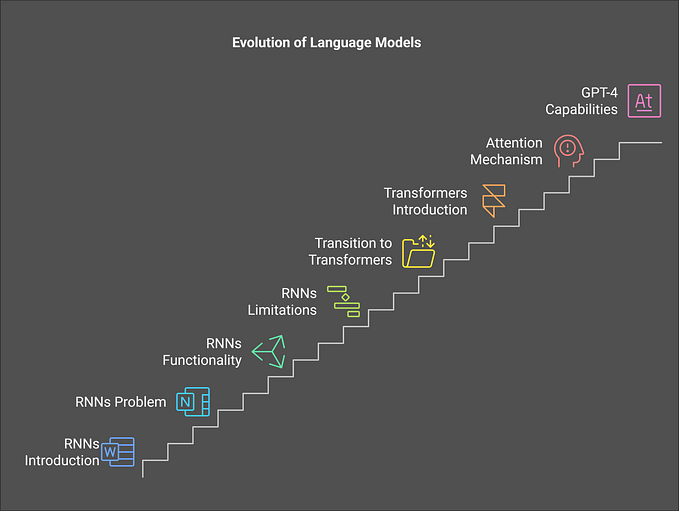

Language models have played a pivotal role in shaping how machines understand and generate human-like text. The latest step in this domain is the era of 1-bit Large Language Models (LLMs): models whose weights each carry about 1.58 bits of information, a significant stride towards more efficient and sustainable AI systems. The motivation is cost. Today's most capable LLMs require substantial compute, memory, and energy, which limits their accessibility and practicality.

This is where the exciting concept of 1-bit LLMs comes in, offering a revolutionary approach to achieving high performance with minimal resources.

The Birth of 1-bit LLMs:

Traditionally, language models have been characterized by their immense size, requiring vast computational resources and energy. The emergence of 1-bit LLMs challenges this norm with an approach that combines compactness with performance comparable to full-precision models.

At the heart of this innovation lies a representation that uses about 1.58 bits per weight, a seemingly minimalistic encoding that belies its true potential. This shift represents a departure from the resource-intensive models of the past, allowing for more sustainable and eco-friendly AI solutions.

What are 1-bit LLMs?

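Imagine an LLM where each parameter, the heart of its learning process, is represented not by the usual 16 or 32 bits, but by one of just three values: -1, 0, or 1. Encoding a three-way choice takes log2(3) ≈ 1.58 bits, which is where the "1.58" in the name comes from; the original 1-bit design used only the two values -1 and 1. This simplification, achieved through a technique called “ternarization,” leads to large efficiency gains.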
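To make ternarization concrete, here is a minimal sketch in plain Python. It assumes the "absmean" scheme as I read it in the referenced paper: divide each weight by the mean absolute value of all weights, round to the nearest integer, and clip to the range -1 to 1. The function name `absmean_ternarize`, the `eps` value, and the sample weights are illustrative assumptions, not taken from the paper's code.

```python
import math

def absmean_ternarize(weights, eps=1e-8):
    """Map float weights to values in {-1, 0, 1}.

    Returns (ternary_weights, scale), where scale is the mean absolute
    value of the inputs. Hypothetical helper for illustration only.
    """
    scale = sum(abs(w) for w in weights) / len(weights)
    ternary = []
    for w in weights:
        q = round(w / (scale + eps))  # nearest integer after rescaling
        q = max(-1, min(1, q))        # clip into the ternary range
        ternary.append(q)
    return ternary, scale

# Made-up example weights (not real model parameters).
weights = [0.42, -0.07, -1.30, 0.05, 0.88, -0.51]
ternary, scale = absmean_ternarize(weights)

# Information needed to encode one of three equally likely values.
bits_per_weight = math.log2(3)

print(ternary)                    # every entry is -1, 0, or 1
print(round(bits_per_weight, 2))  # about 1.58
```

Small weights collapse to 0 and large ones saturate at -1 or 1, so what survives is mostly the sign pattern plus a single shared scale.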

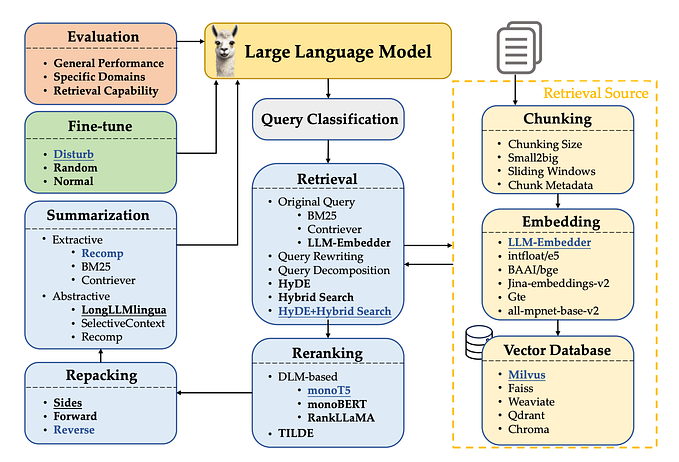

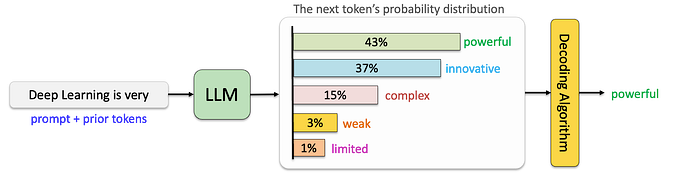

The figure above visualizes 1-bit LLMs such as BitNet b1.58, which offer a Pareto improvement: lower inference cost (latency, throughput, and energy consumption) without compromising model performance. BitNet b1.58's computational paradigm also calls for new hardware designed specifically for 1-bit LLMs.
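One way to see why this computational paradigm differs from ordinary inference: when every weight is -1, 0, or 1, a matrix-vector product needs no multiplications at all, only additions and subtractions. The sketch below illustrates that idea in plain Python; `ternary_matvec` is a hypothetical helper written for this article, not an optimized kernel or any library's API.

```python
def ternary_matvec(ternary_rows, x):
    """Compute y = W x for a matrix W with entries in {-1, 0, 1},
    using only additions and subtractions."""
    y = []
    for row in ternary_rows:
        acc = 0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi   # weight +1: add the input
            elif w == -1:
                acc -= xi   # weight -1: subtract the input
            # weight 0: the input is skipped entirely
        y.append(acc)
    return y

# Made-up example values.
W = [[1, 0, -1],
     [-1, 1, 1]]
x = [2, 5, 3]

y = ternary_matvec(W, x)

# Reference result using ordinary multiply-accumulate.
y_ref = [sum(w * xi for w, xi in zip(row, x)) for row in W]
print(y, y_ref)
```

Real implementations would operate on packed weights and vectorized integer adds, but the arithmetic being replaced is the same.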

The Future of 1-bit LLMs:

The potential of 1-bit LLMs extends far beyond simply improving efficiency. This technology opens doors to exciting possibilities:

Efficiency Redefined:

The move towards 1-bit LLMs underscores the importance of efficiency in the AI ecosystem. By achieving comparable results with far fewer bits per weight, these models reduce the strain on hardware and make applications in resource-constrained environments practical. 1-bit LLMs are more CPU-friendly, and CPUs are the main processors in edge and mobile devices, so this could change how we use language models on smartphones, wearables, and similar hardware. Their unusual arithmetic also invites specialized hardware optimized for their execution, pushing performance and efficiency further.

Improved Accessibility:

The advent of 1-bit LLMs brings forth a democratization of AI, making advanced language models accessible to a broader audience. The reduced computational requirements let smaller organizations, researchers, and developers with limited resources deploy state-of-the-art language models on a wider variety of platforms. This inclusivity fosters innovation across diverse domains and helps distribute the benefits of AI more equitably.

Environmental Sustainability:

One of the most compelling aspects of 1-bit LLMs is their positive impact on environmental sustainability. The reduced computational demand translates into lower energy consumption, aligning with the global push for greener technologies. As concerns about the environmental footprint of AI continue to grow, the adoption of 1-bit LLMs presents a promising solution that balances technological advancement with ecological responsibility.

Performance on par with full-precision models: Despite using just 1.58 bits per parameter, BitNet b1.58 matches full-precision models of the same size trained on the same number of tokens, starting from about 3B parameters according to the paper, in terms of both perplexity (a measure of how well the model predicts text) and end-task performance (e.g., question answering and commonsense reasoning benchmarks).

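Significantly reduced resource consumption: Compared to full-precision models, BitNet b1.58 requires significantly less memory, reduces latency, and consumes less energy. For example, the paper reports that at 3B parameters BitNet b1.58 is 2.71 times faster and uses 3.55 times less GPU memory than a comparable FP16 LLaMA model. This translates to faster processing, lower costs, and a smaller environmental footprint.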

A new paradigm for LLM development: BitNet b1.58 establishes a new scaling law and training recipe for future LLMs. This paves the way for developing powerful and efficient models that can be deployed on a wider range of devices, from powerful servers to resource-constrained edge devices and mobile phones.
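The memory savings noted above can be estimated with simple arithmetic: weight storage is roughly the number of parameters times the bits per parameter. The sketch below is a back-of-the-envelope calculation, not a measurement. The 3-billion-parameter count is an assumed example size, `weight_memory_gb` is a hypothetical helper, and the 2-bit row assumes a simple packing of one ternary value into two bits. It counts weights only, so real-world savings will be smaller once embeddings, activations, and other higher-precision state are included.

```python
import math

def weight_memory_gb(num_params, bits_per_param):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

num_params = 3_000_000_000  # assumed example model size

fp16_gb = weight_memory_gb(num_params, 16)               # full-precision baseline
two_bit_gb = weight_memory_gb(num_params, 2)             # simple 2-bit packing of ternary values
ideal_gb = weight_memory_gb(num_params, math.log2(3))    # theoretical 1.58-bit limit

print(f"FP16 weights:      {fp16_gb:.2f} GB")
print(f"2-bit packed:      {two_bit_gb:.2f} GB")
print(f"log2(3)-bit ideal: {ideal_gb:.2f} GB")
```

Under these assumptions the weights alone shrink by about a factor of ten at the theoretical limit, which is an upper bound on what a whole deployed model can save.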

Challenges and Future Prospects:

While the era of 1-bit LLMs brings forth a multitude of advantages, it is not without challenges. Fine-tuning models to operate effectively with such limited bits poses unique hurdles, requiring ongoing research and development efforts. Furthermore, the ethical considerations surrounding the use of AI persist, necessitating a responsible and transparent approach to deployment.

Looking ahead, the prospects for 1-bit LLMs are exciting. Continued research will likely lead to refinements and optimizations, pushing the boundaries of what is achievable with minimal bit representations. As these models become more ingrained in our technological landscape, their impact on various industries, from healthcare to finance, promises to be transformative.

Conclusion:

The era of 1-bit LLMs marks a significant advancement in the evolution of language models. At about 1.58 bits per weight, these models redefine efficiency, accessibility, and environmental sustainability in artificial intelligence while maintaining performance competitive with full-precision models. As we navigate this frontier, they have the potential to reshape how we interact with language, unlock new possibilities in various domains, and bring about more inclusive, eco-friendly, and powerful AI systems.

Reference: The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits https://arxiv.org/abs/2402.17764