Mastering GCP Data Engineering: Designing Scalable Data Processing Systems

In this blog, we dive deep into Domain 1: Designing Data Processing Systems, a core pillar of the GCP Data Engineer Professional Certification. This domain equips you with the knowledge to architect scalable, efficient, and cost-effective data solutions. By mastering this domain, you’ll lay the foundation for solving real-world challenges in data engineering.

Objectives of This Blog

By the end of this blog, you’ll learn:

- The importance of designing scalable data processing systems.

- Key concepts and considerations when building data processing systems.

- GCP services that play a crucial role in this domain.

- A practical, step-by-step hands-on example to solidify your understanding.

- Common pitfalls to avoid when designing data systems.

Why Designing Data Processing Systems Matters

In today’s data-driven world, businesses rely on well-designed systems to:

- Handle growing data volumes seamlessly.

- Process data efficiently, both in real-time and batch modes.

- Optimize costs without compromising performance.

- Ensure scalability, fault tolerance, and reliability.

Let’s consider a simple analogy: Imagine building a highway for a growing city. If designed poorly, traffic will choke, repairs will be frequent, and costs will skyrocket. Similarly, poorly designed data systems struggle under increasing workloads, leading to inefficiencies and outages.

Designing these systems correctly from the start can save businesses time, money, and resources.

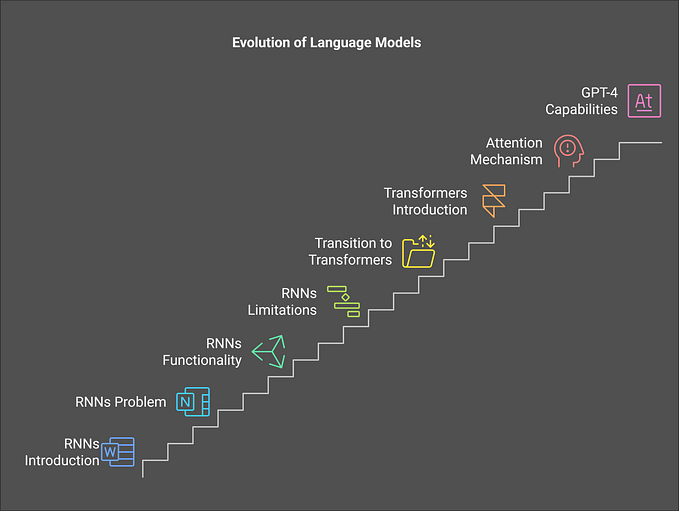

Figure 1 illustrates the key benefits of designing scalable data systems, including scalability, cost optimization, reliability, enhanced performance, and fault tolerance. These elements collectively ensure efficient and resilient data processing systems.

Key Concepts in Designing Data Processing Systems

1. Choosing the Right Storage Solution

Your choice of storage determines the efficiency, scalability, and cost-effectiveness of your system.

- Structured Data: Use BigQuery for analytical workloads involving structured data.

For example, sales transaction data.

- Semi-Structured Data: Keep raw files in Cloud Storage, or load them into BigQuery (which supports nested and repeated fields and a JSON data type) when you need to query them with SQL. JSON logs fit well here.

- Unstructured Data: Use Cloud Storage for raw files like images, videos, or backups.
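The rules of thumb above can be condensed into a small lookup. This is a minimal sketch: the function name choose_storage and its category labels are hypothetical, and real decisions also weigh latency, access patterns, and cost.

```python
def choose_storage(data_kind: str, needs_sql_analytics: bool = False) -> str:
    """Suggest a GCP storage service for a dataset (hypothetical helper).

    - structured data for analytics -> BigQuery
    - semi-structured data -> BigQuery if you query it with SQL, else Cloud Storage
    - unstructured files (images, videos, backups) -> Cloud Storage
    """
    kind = data_kind.lower()
    if kind == "structured":
        return "BigQuery"
    if kind == "semi-structured":
        return "BigQuery" if needs_sql_analytics else "Cloud Storage"
    if kind == "unstructured":
        return "Cloud Storage"
    raise ValueError(f"unknown data kind: {data_kind!r}")


print(choose_storage("structured"))                                 # BigQuery (sales transactions)
print(choose_storage("semi-structured", needs_sql_analytics=True))  # BigQuery (JSON logs you query)
print(choose_storage("unstructured"))                               # Cloud Storage (images, backups)
```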

2. Batch vs. Streaming Data Processing

- Batch Processing: Ideal for periodic data loads where latency isn’t critical.

Example: Generating a daily sales report.

- Streaming Processing: Necessary for real-time insights.

Example: Processing sensor data to detect equipment failures as they occur.
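The difference is easiest to see side by side. In this plain-Python sketch (no GCP services involved; the record fields and the temperature threshold are hypothetical), the batch function needs the complete day of transactions before it returns one result, while the streaming function inspects each sensor reading as it arrives and emits an alert immediately.

```python
from collections.abc import Iterable, Iterator


def daily_sales_report(transactions: list[dict]) -> dict:
    """Batch: process a complete, bounded dataset once (e.g. nightly)."""
    total = sum(t["amount"] for t in transactions)
    return {"orders": len(transactions), "revenue": round(total, 2)}


def detect_failures(readings: Iterable[dict], max_temp: float = 90.0) -> Iterator[str]:
    """Streaming: handle a possibly unbounded sequence one event at a time.

    Alerts are yielded as soon as a reading crosses the threshold,
    without waiting for the rest of the stream.
    """
    for r in readings:
        if r["temp_c"] > max_temp:
            yield f"ALERT: {r['sensor_id']} at {r['temp_c']} C"


sales = [{"amount": 19.99}, {"amount": 5.01}, {"amount": 75.00}]
print(daily_sales_report(sales))  # {'orders': 3, 'revenue': 100.0}

sensor_feed = iter([
    {"sensor_id": "pump-1", "temp_c": 71.5},
    {"sensor_id": "pump-2", "temp_c": 93.2},
])
for alert in detect_failures(sensor_feed):
    print(alert)  # ALERT: pump-2 at 93.2 C
```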

3. Scalability and Fault Tolerance

- Scalability: Design systems to handle increasing workloads without degrading performance.

For example, use Bigtable for time-series data or high-throughput NoSQL use cases.

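- Fault Tolerance: Ensure systems recover gracefully from failures. Pub/Sub, for example, stores messages durably and delivers them at least once, so a subscriber may occasionally receive the same message twice and should process messages idempotently.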
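Because at-least-once delivery means a message can arrive more than once, downstream processing should be idempotent. A minimal sketch, assuming each message carries a unique ID (Pub/Sub assigns a message_id to every published message, and a redelivery keeps it) and that an in-memory set is an acceptable stand-in for a durable store; the class name IdempotentProcessor is hypothetical.

```python
class IdempotentProcessor:
    """Apply a handler at most once per message ID (hypothetical helper).

    The in-memory set is for illustration only; a real consumer would keep
    processed IDs in durable storage so that restarts do not forget them.
    """

    def __init__(self, handler):
        self.handler = handler
        self.seen_ids: set[str] = set()

    def process(self, message_id: str, payload: dict) -> bool:
        if message_id in self.seen_ids:
            return False  # duplicate redelivery: skip it (still safe to ack)
        self.handler(payload)
        self.seen_ids.add(message_id)  # record only after the handler succeeds
        return True


totals = {"clicks": 0}
proc = IdempotentProcessor(lambda p: totals.update(clicks=totals["clicks"] + p["clicks"]))

# The second delivery of "m-1" simulates an at-least-once redelivery.
for msg_id, payload in [("m-1", {"clicks": 3}), ("m-2", {"clicks": 2}), ("m-1", {"clicks": 3})]:
    proc.process(msg_id, payload)

print(totals)  # {'clicks': 5}
```

Duplicates created on the publisher side (for example, a retried publish) can receive a new message_id, so an application-level event ID is the more robust deduplication key when publishers retry.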

4. Workflow Automation

Automate repetitive tasks using Cloud Composer (Apache Airflow) to schedule, monitor, and manage workflows.
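Cloud Composer runs Apache Airflow, where a workflow is a DAG of tasks with dependencies, schedules, and retries. The sketch below is not Airflow code; it is a plain-Python toy (task names hypothetical) that shows two core things an orchestrator automates for you: running tasks in dependency order and retrying transient failures.

```python
from graphlib import TopologicalSorter


def run_workflow(tasks: dict, deps: dict, max_retries: int = 2) -> list[str]:
    """Run tasks in dependency order, retrying each failed task.

    tasks: task name -> zero-argument callable
    deps:  task name -> set of upstream task names it depends on
    Returns the names of tasks in the order they completed.
    """
    completed = []
    for name in TopologicalSorter(deps).static_order():
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                break
            except Exception:
                if attempt == max_retries:
                    raise  # give up; downstream tasks never run
        completed.append(name)
    return completed


attempts = {"load": 0}


def flaky_load():
    # Fails on the first attempt to exercise the retry logic.
    attempts["load"] += 1
    if attempts["load"] == 1:
        raise RuntimeError("transient error")


tasks = {"extract": lambda: None, "load": flaky_load, "report": lambda: None}
deps = {"load": {"extract"}, "report": {"load"}}

print(run_workflow(tasks, deps))  # ['extract', 'load', 'report']
```

In Airflow the same ideas are expressed declaratively: dependencies between operators and a retries setting per task, with Composer handling scheduling and monitoring.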

5. Cost Optimization

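- Use the appropriate Cloud Storage class, such as Coldline for infrequently accessed data.

- Choose between BigQuery on-demand pricing (billed by bytes processed) and capacity-based pricing through BigQuery Reservations according to how steady your workloads are, and reduce bytes scanned with partitioned and clustered tables.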
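Both choices reduce to simple arithmetic. The sketch below picks a Cloud Storage class from how often data is read, following Google's guidance (Nearline for data read about once a month or less, Coldline about once a quarter or less, Archive less than once a year), and estimates an on-demand BigQuery query cost from bytes processed. The per-TiB rate is a parameter, not a quoted price; you can obtain bytes processed before running a query with a BigQuery dry run.

```python
def suggest_storage_class(days_between_reads: float) -> str:
    """Map expected read frequency to a Cloud Storage class name."""
    if days_between_reads < 30:
        return "STANDARD"
    if days_between_reads < 90:
        return "NEARLINE"
    if days_between_reads < 365:
        return "COLDLINE"
    return "ARCHIVE"


def on_demand_query_cost(bytes_processed: int, usd_per_tib: float) -> float:
    """Estimate on-demand BigQuery cost from bytes processed (1 TiB = 2**40 bytes).

    usd_per_tib is an input, not a hard-coded price; look up the current rate.
    Ignores the free tier and minimum bytes billed per query.
    """
    return bytes_processed / 2**40 * usd_per_tib


print(suggest_storage_class(7))    # STANDARD
print(suggest_storage_class(180))  # COLDLINE
# 512 GiB scanned at an illustrative rate of 5.0 per TiB:
print(on_demand_query_cost(512 * 2**30, usd_per_tib=5.0))  # 2.5
```

Note that Nearline, Coldline, and Archive also carry minimum storage durations and retrieval fees, so read frequency is only the first filter.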

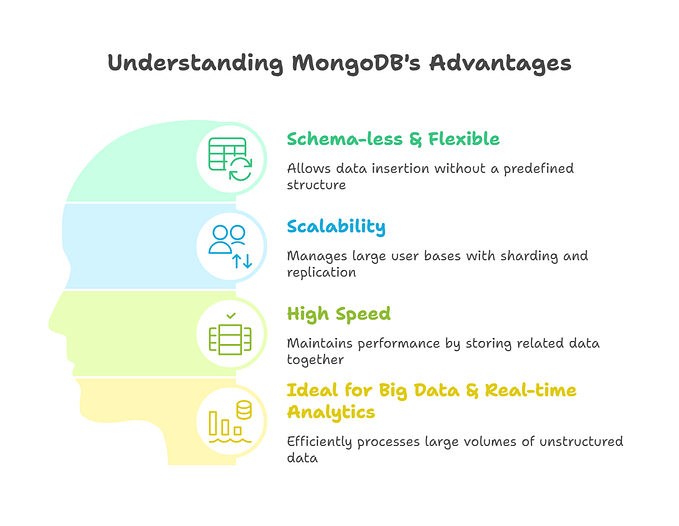

Figure 2 outlines the essential components of designing data processing systems. It emphasizes key considerations such as storage solutions, scalability and fault tolerance, cost optimization, processing methods (batch vs. streaming), and workflow automation. Each element contributes to building efficient, reliable, and cost-effective data systems.

Key GCP Services for Designing Data Processing Systems

Let’s explore the key tools and services you’ll use:

- BigQuery: A serverless, highly scalable data warehouse that supports SQL-based analytics for massive datasets.

- Cloud Storage: Durable object storage for raw and unstructured data, with multiple storage classes for cost management.

- Bigtable: A NoSQL database designed for high-throughput workloads such as time-series data.

- Pub/Sub: A messaging service for real-time ingestion and communication between systems.

- Dataflow: A fully managed service for creating batch and streaming pipelines using Apache Beam.

- Cloud Composer: An orchestration tool for automating and scheduling workflows.

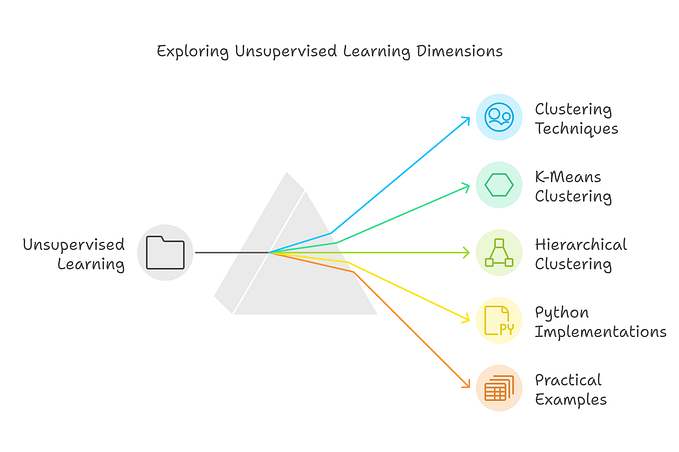

Figure 3 highlights essential GCP services for data processing, including BigQuery for analytics, Bigtable for NoSQL workloads, Cloud Storage for raw data, Pub/Sub for messaging, Dataflow for pipelines, and Cloud Composer for workflow orchestration.

Real-World Applications

Use Case: IoT Sensor Data Processing

Scenario: An energy company monitors thousands of IoT sensors in real time to track equipment health and predict failures.

Requirements:

- Ingest Data: Collect real-time sensor readings.

- Process Data: Filter and enrich data to detect anomalies.

- Store Data: Archive raw data for compliance and analytics.

- Visualize Insights: Provide real-time dashboards for decision-making.

Solution Architecture:

- Use Pub/Sub to ingest real-time IoT sensor data.

- Use Dataflow to process the data by filtering anomalies and adding metadata.

- Store raw data in Cloud Storage for archival and processed data in BigQuery for analytics.

- Use Looker Studio (formerly Data Studio) to create dashboards visualizing equipment health metrics.
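The per-record logic of the Dataflow step (flag anomalies, attach metadata) can be prototyped in plain Python before it is wrapped in an Apache Beam transform. The reading fields (sensor_id, vibration_mm_s), the SENSOR_METADATA lookup, and the threshold are hypothetical placeholders for whatever your sensors actually emit.

```python
# Hypothetical reference data; in production this might come from a BigQuery
# table or a side input in the Dataflow pipeline.
SENSOR_METADATA = {
    "turbine-7": {"site": "north-field", "equipment": "wind turbine"},
    "pump-3": {"site": "plant-a", "equipment": "coolant pump"},
}

VIBRATION_LIMIT_MM_S = 7.0  # hypothetical alarm threshold


def enrich_reading(reading: dict) -> dict:
    """Attach site metadata and an anomaly flag to one sensor reading."""
    meta = SENSOR_METADATA.get(reading["sensor_id"], {"site": "unknown", "equipment": "unknown"})
    return {
        **reading,
        **meta,
        "is_anomaly": reading["vibration_mm_s"] > VIBRATION_LIMIT_MM_S,
    }


def anomalies(readings):
    """Keep only enriched readings that look abnormal (e.g. for an alerting table)."""
    return [r for r in map(enrich_reading, readings) if r["is_anomaly"]]


batch = [
    {"sensor_id": "turbine-7", "vibration_mm_s": 9.4},
    {"sensor_id": "pump-3", "vibration_mm_s": 2.0},
]
for r in anomalies(batch):
    print(r["sensor_id"], r["site"], r["is_anomaly"])  # turbine-7 north-field True
```

In a Beam pipeline, enrich_reading maps naturally onto beam.Map and the anomaly check onto beam.Filter, while all enriched readings would still be written to BigQuery and the raw messages archived to Cloud Storage.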

Hands-On Example: Build a Streaming Data Pipeline

Objective:

Create a pipeline that ingests real-time clickstream data, processes it to enrich metadata, and stores it for analytics.

Step-by-Step Guide:

Set Up Pub/Sub:

- Create a Pub/Sub topic named clickstream_topic.

- Create a subscription named clickstream_sub to receive messages.
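Both resources can also be created from Python. This is a minimal sketch assuming the google-cloud-pubsub package and application default credentials; the helper names are hypothetical. The hand-written path helpers mirror PublisherClient.topic_path and SubscriberClient.subscription_path so the naming can be checked without the library installed.

```python
def topic_path(project_id: str, topic_id: str) -> str:
    """Build a fully qualified Pub/Sub topic name."""
    return f"projects/{project_id}/topics/{topic_id}"


def subscription_path(project_id: str, subscription_id: str) -> str:
    """Build a fully qualified Pub/Sub subscription name."""
    return f"projects/{project_id}/subscriptions/{subscription_id}"


def create_clickstream_resources(project_id: str) -> None:
    """Create clickstream_topic and a pull subscription clickstream_sub on it."""
    # Imported lazily so the path helpers above work without the library.
    from google.cloud import pubsub_v1

    topic = topic_path(project_id, "clickstream_topic")
    subscription = subscription_path(project_id, "clickstream_sub")

    publisher = pubsub_v1.PublisherClient()
    publisher.create_topic(request={"name": topic})

    # The subscriber client is closed automatically when the block exits.
    with pubsub_v1.SubscriberClient() as subscriber:
        subscriber.create_subscription(request={"name": subscription, "topic": topic})


# Usage (needs a real project and credentials):
# create_clickstream_resources("YOUR_PROJECT_ID")
```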

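Simulate Data:

- Write a Python script that simulates clickstream events and publishes them to clickstream_topic (requires the google-cloud-pubsub package).

```python
import json
import time

from google.cloud import pubsub_v1

PROJECT_ID = "YOUR_PROJECT_ID"  # replace with your GCP project ID

publisher = pubsub_v1.PublisherClient()
topic_path = publisher.topic_path(PROJECT_ID, "clickstream_topic")


def publish_event():
    """Publish one simulated clickstream event as a JSON-encoded message."""
    event = {
        "user_id": "1234",
        "timestamp": time.time(),  # seconds since the Unix epoch
        "page": "/home",
    }
    future = publisher.publish(topic_path, json.dumps(event).encode("utf-8"))
    # Block until Pub/Sub accepts the message; raises if publishing failed.
    future.result()


# Publish one event per second for 100 seconds.
for _ in range(100):
    publish_event()
    time.sleep(1)
```

Create a Dataflow Job: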

- Use a Google-provided Dataflow template or a Python Apache Beam pipeline to process the data.

- Filter events and add metadata before writing to BigQuery.
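A minimal Python Apache Beam sketch of this step, assuming the JSON events produced by the simulator above, a BigQuery destination given as PROJECT:DATASET.TABLE, and a hypothetical derived section column as the added metadata. The transform functions are plain Python so they can be unit-tested without Beam; run() imports Beam lazily and needs the apache-beam[gcp] package.

```python
import json
from datetime import datetime, timezone

# BigQuery schema in the "name:TYPE,..." form accepted by WriteToBigQuery.
TABLE_SCHEMA = "user_id:STRING,page:STRING,event_time:TIMESTAMP,section:STRING"


def parse_event(message: bytes) -> dict:
    """Decode a Pub/Sub payload; malformed messages become {} and are filtered out."""
    try:
        event = json.loads(message.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError):
        return {}
    return event if isinstance(event, dict) else {}


def is_valid(event: dict) -> bool:
    """Keep only events that have the fields the table needs."""
    page = event.get("page")
    return (
        bool(event.get("user_id"))
        and isinstance(page, str)
        and page != ""
        and isinstance(event.get("timestamp"), (int, float))
    )


def enrich(event: dict) -> dict:
    """Convert the epoch timestamp and add a derived 'section' field (hypothetical metadata)."""
    page = event["page"]
    return {
        "user_id": str(event["user_id"]),
        "page": page,
        "event_time": datetime.fromtimestamp(event["timestamp"], tz=timezone.utc).isoformat(),
        # e.g. "/products/42" -> "products"; "/" -> "home"
        "section": page.strip("/").split("/")[0] or "home",
    }


def run(subscription: str, table: str, argv=None) -> None:
    """Build and run the streaming pipeline.

    argv: pipeline flags such as --runner, --project, --region, --temp_location.
    """
    import apache_beam as beam
    from apache_beam.options.pipeline_options import PipelineOptions, StandardOptions

    options = PipelineOptions(argv)
    options.view_as(StandardOptions).streaming = True

    with beam.Pipeline(options=options) as pipeline:
        (
            pipeline
            | "ReadFromPubSub" >> beam.io.ReadFromPubSub(subscription=subscription)
            | "Parse" >> beam.Map(parse_event)
            | "DropInvalid" >> beam.Filter(is_valid)
            | "Enrich" >> beam.Map(enrich)
            | "WriteToBigQuery" >> beam.io.WriteToBigQuery(
                table,
                schema=TABLE_SCHEMA,
                write_disposition=beam.io.BigQueryDisposition.WRITE_APPEND,
                create_disposition=beam.io.BigQueryDisposition.CREATE_IF_NEEDED,
            )
        )


# Example (all names are placeholders):
# run("projects/YOUR_PROJECT_ID/subscriptions/clickstream_sub",
#     "YOUR_PROJECT_ID:clickstream.events",
#     ["--runner=DataflowRunner", "--project=YOUR_PROJECT_ID",
#      "--region=us-central1", "--temp_location=gs://YOUR_BUCKET/tmp"])
```

Dropping malformed events with a filter keeps the sketch short; a production pipeline would more likely route them to a dead-letter table for inspection.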

Set Up BigQuery:

- Create a table to store the processed data.

- Run SQL queries to analyze user behavior.
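A minimal sketch of both steps with the google-cloud-bigquery client, assuming table IDs in the project.dataset.table form and the columns user_id, page, event_time, plus a hypothetical derived section column. Partitioning by event_time means queries that filter on time scan, and bill for, less data. The helper names are hypothetical, and table_id is assumed to be trusted configuration rather than user input.

```python
def create_clickstream_table(table_id: str):
    """Create a day-partitioned table for clickstream events if it does not exist."""
    from google.cloud import bigquery  # imported lazily; needs google-cloud-bigquery

    client = bigquery.Client()
    schema = [
        bigquery.SchemaField("user_id", "STRING", mode="REQUIRED"),
        bigquery.SchemaField("page", "STRING", mode="REQUIRED"),
        bigquery.SchemaField("event_time", "TIMESTAMP", mode="REQUIRED"),
        bigquery.SchemaField("section", "STRING"),
    ]
    table = bigquery.Table(table_id, schema=schema)
    table.time_partitioning = bigquery.TimePartitioning(
        type_=bigquery.TimePartitioningType.DAY, field="event_time"
    )
    return client.create_table(table, exists_ok=True)


def top_pages_query(table_id: str, days: int = 7) -> str:
    """SQL for the most-viewed pages and their unique visitors over the last N days."""
    return f"""
SELECT page, COUNT(*) AS views, COUNT(DISTINCT user_id) AS unique_users
FROM `{table_id}`
WHERE event_time >= TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL {int(days)} DAY)
GROUP BY page
ORDER BY views DESC
LIMIT 10
"""


def run_query(sql: str) -> list[dict]:
    """Execute a query and return the result rows as plain dicts."""
    from google.cloud import bigquery

    client = bigquery.Client()
    return [dict(row.items()) for row in client.query(sql).result()]


# Usage (placeholders; needs credentials):
# create_clickstream_table("YOUR_PROJECT_ID.clickstream.events")
# rows = run_query(top_pages_query("YOUR_PROJECT_ID.clickstream.events", days=7))
```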

Visualize Insights:

- Connect BigQuery to Looker Studio (formerly Data Studio) and build a dashboard showing user activity trends.

Common Pitfalls and How to Avoid Them

Choosing the Wrong Storage Solution:

- Mistake: Using BigQuery for archival storage.

- Solution: Use Cloud Storage for backups and archival data.

Underestimating Scalability Needs:

- Mistake: Designing systems without considering future growth.

- Solution: Use services that scale horizontally, such as Dataflow, which can autoscale its workers, and Bigtable, where you can add nodes or enable autoscaling as load grows.

Ignoring Cost Optimization:

- Mistake: Using high-performance tiers unnecessarily.

- Solution: Analyze usage patterns and select appropriate service tiers.

Visualizing the Architecture

The streaming data pipeline from the hands-on example follows this architecture:

- Ingestion: Pub/Sub collects clickstream data.

- Processing: Dataflow transforms and enriches the data.

- Storage: Processed data is stored in BigQuery for analytics.

- Visualization: Looker Studio presents insights via dashboards.

Conclusion

Designing data processing systems is the cornerstone of efficient data engineering. By mastering this domain, you’ll be equipped to build scalable, cost-effective, and reliable solutions that address real-world challenges.

Focus on hands-on practice with GCP services like BigQuery, Dataflow, and Pub/Sub to solidify your knowledge. Remember, a well-designed system not only meets current needs but is also future-proof.

Stay tuned for the next blog in this series, where we’ll explore Building and Operationalizing Data Processing Systems in detail. Let’s continue mastering GCP Data Engineering together!