Mastering GCP Data Engineering: Building and Operationalizing Data Processing Systems

In this blog, we’ll explore Domain 2: Building and Operationalizing Data Processing Systems, a vital area of expertise for the GCP Data Engineer Professional Certification. This domain focuses on the creation, automation, and maintenance of data workflows that power business insights and enable seamless scalability.

Let’s delve into the concepts, tools, and hands-on examples to help you master this critical domain.

Objectives of This Blog

By the end of this blog, you’ll learn:

- The importance of building and operationalizing robust data systems.

- Key concepts, including ETL/ELT workflows, automation, and high availability.

- GCP services essential for this domain.

- How to implement a batch processing pipeline in a hands-on project.

- Common pitfalls to avoid when operationalizing data systems.

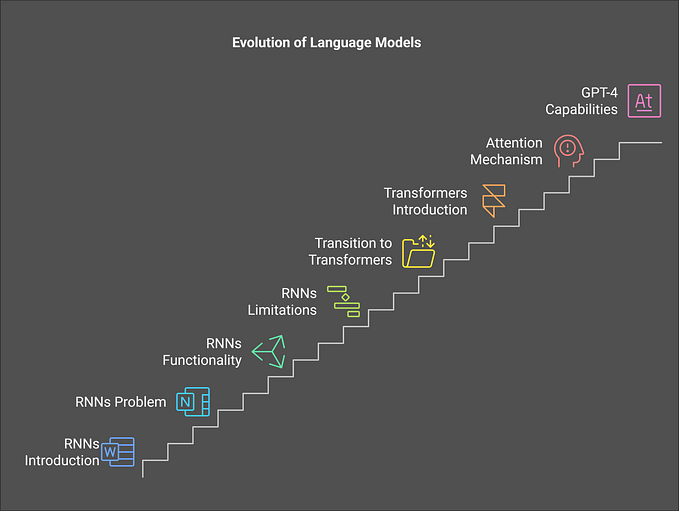

Figure 1 illustrates the focus areas of Domain 2: Building and Operationalizing Data Processing Systems in mastering GCP Data Engineering. The central theme is creating efficient, reliable systems.

Why Building and Operationalizing Data Processing Systems Matters

Modern businesses depend on automated, reliable data systems to:

- Handle diverse data processing needs (batch, real-time, and hybrid).

- Enable faster decision-making with automated workflows.

- Maintain system availability even during high traffic or failure scenarios.

For example, imagine an online retailer analyzing customer transactions daily to optimize pricing strategies. Without a robust, automated data processing system, such tasks would become error-prone and time-intensive, hindering business agility.

Example Analogy: Building a data processing system is like designing an assembly line in a factory. Each step must be automated, efficient, and reliable to ensure the final product (business insights) is delivered on time without manual intervention.

Key Concepts in Building and Operationalizing Data Systems

1. ETL vs. ELT Pipelines

- ETL (Extract, Transform, Load):

- Data is transformed before loading into the target system.

- Suitable when the target system has limited capacity for transformations, e.g., a traditional relational database.

- ELT (Extract, Load, Transform):

- Data is loaded first and transformed later within the target system.

- Ideal for modern systems like BigQuery that handle transformations efficiently.

Practical Example:

- In an ETL pipeline, sales data from multiple regions is cleaned and aggregated before being loaded into a data warehouse.

- In an ELT pipeline, raw sales data is loaded into BigQuery, where SQL transformations generate reports.
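To make the difference concrete, here is a minimal sketch that runs the same report both ways. It uses Python's built-in `sqlite3` as a stand-in for the warehouse so it runs anywhere; the sample rows, table names, and function names are assumptions made for illustration, not part of any GCP API.

```python
import sqlite3

# Hypothetical raw sales rows: (region, product_id, quantity, price).
RAW_SALES = [
    ("emea", "p1", 2, 10.0),
    ("emea", "p2", 1, 5.0),
    ("apac", "p1", 3, 10.0),
]


def run_etl(rows):
    """ETL: aggregate in application code, then load only the result."""
    totals = {}
    for region, _product, quantity, price in rows:
        totals[region] = totals.get(region, 0.0) + quantity * price
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE revenue_by_region (region TEXT, revenue REAL)")
    conn.executemany("INSERT INTO revenue_by_region VALUES (?, ?)", totals.items())
    return conn.execute(
        "SELECT region, revenue FROM revenue_by_region ORDER BY region"
    ).fetchall()


def run_elt(rows):
    """ELT: load raw rows first, then transform with SQL inside the warehouse."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE raw_sales "
        "(region TEXT, product_id TEXT, quantity INTEGER, price REAL)"
    )
    conn.executemany("INSERT INTO raw_sales VALUES (?, ?, ?, ?)", rows)
    return conn.execute(
        "SELECT region, SUM(quantity * price) FROM raw_sales "
        "GROUP BY region ORDER BY region"
    ).fetchall()


etl_report = run_etl(RAW_SALES)
elt_report = run_elt(RAW_SALES)
```

Both functions produce the same report. The difference is where the aggregation runs and whether the raw rows remain available in the warehouse for later questions.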

2. Workflow Automation

Automating workflows minimizes manual effort, reduces errors, and ensures timely execution of data processing tasks. GCP’s Cloud Composer (based on Apache Airflow) allows you to:

- Orchestrate multi-step workflows.

- Monitor and retry failed tasks.

- Schedule recurring jobs with dependencies.

Real-World Scenario: Automate a daily pipeline that pulls data from APIs, processes it with Dataflow, and stores results in BigQuery. Use Cloud Composer to ensure timely execution and alert on failures.
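To see what an orchestrator does for you, the sketch below runs three tasks in dependency order and retries a failing one. It is plain Python (3.9+ for `graphlib`), not Airflow's implementation; `run_workflow` and the task names are hypothetical and only mirror the scenario above.

```python
from graphlib import TopologicalSorter


def run_workflow(tasks, dependencies, retries=3):
    """Run callables in dependency order, retrying each failed task.

    tasks: task name -> zero-argument callable.
    dependencies: task name -> set of task names that must finish first.
    Returns task name -> number of attempts used.
    """
    attempts = {}
    for name in TopologicalSorter(dependencies).static_order():
        for attempt in range(1, retries + 2):  # first try plus `retries` retries
            try:
                tasks[name]()
                attempts[name] = attempt
                break
            except Exception as exc:
                if attempt == retries + 1:
                    # Out of retries: this is where an alert would be sent.
                    raise RuntimeError(f"task {name!r} failed") from exc
    return attempts


# Hypothetical three-step pipeline; the API call fails once, then succeeds.
calls = {"pull_api": 0}
order = []


def pull_api():
    calls["pull_api"] += 1
    if calls["pull_api"] == 1:
        raise ConnectionError("transient API error")
    order.append("pull_api")


tasks = {
    "pull_api": pull_api,
    "run_dataflow": lambda: order.append("run_dataflow"),
    "load_bigquery": lambda: order.append("load_bigquery"),
}
dependencies = {
    "pull_api": set(),
    "run_dataflow": {"pull_api"},
    "load_bigquery": {"run_dataflow"},
}
attempts = run_workflow(tasks, dependencies)
```

Cloud Composer gives you the same ordering and retry behavior declaratively, plus scheduling, a UI, and logs, so you rarely need to write this loop yourself.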

3. High Availability and Scalability

A reliable data system should:

- Scale automatically to handle traffic spikes.

- Include fault-tolerant designs to minimize downtime.

Example:

- Use Dataflow to dynamically scale processing jobs based on incoming data volume, ensuring real-time systems remain responsive.
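As a rough illustration, autoscaling on Dataflow is mostly a matter of pipeline options. The sketch below lists flags from Beam's Python SDK; the worker counts are illustrative assumptions rather than recommendations, and `build_options` is a hypothetical helper that needs `apache_beam` installed only when it is called.

```python
# Flags understood by Beam's Python SDK when running on Dataflow.
# The worker counts are illustrative assumptions, not recommendations.
AUTOSCALING_FLAGS = [
    "--runner=DataflowRunner",
    "--autoscaling_algorithm=THROUGHPUT_BASED",  # let the service add or remove workers
    "--num_workers=2",       # initial worker count
    "--max_num_workers=20",  # upper bound that caps cost during spikes
]


def build_options(extra_flags=()):
    """Create PipelineOptions; apache_beam is imported lazily on purpose."""
    from apache_beam.options.pipeline_options import PipelineOptions

    return PipelineOptions(AUTOSCALING_FLAGS + list(extra_flags))
```

Setting an upper bound is the main design choice here: it trades some headroom during extreme spikes for a predictable ceiling on cost.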

4. Error Handling and Monitoring

Proactively monitor data workflows to:

- Detect anomalies.

- Trigger alerts for failures.

- Log detailed error messages for debugging.

Tools:

- Cloud Monitoring: Tracks metrics like CPU usage, latency, and error rates.

- Cloud Logging: Provides detailed logs for debugging failed workflows.

- Cloud Composer alerts: Airflow's email notifications and failure callbacks notify engineers when a task fails.
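The three goals above (detect, alert, log) can be sketched in a few lines of plain Python. This is a simplified illustration, not a GCP API: `process_records`, the error-rate threshold, and the sample records are all assumptions. Bad records go to a dead-letter list instead of crashing the run, each failure is logged as structured JSON, and an alert flag is raised when too many records fail.

```python
import json
import logging

logger = logging.getLogger("pipeline")


def process_records(records, transform, max_error_rate=0.1):
    """Apply transform to each record, routing failures to a dead-letter list.

    Returns (good, dead_letter, alert); alert is True when the share of
    failed records exceeds max_error_rate.
    """
    good, dead_letter = [], []
    for record in records:
        try:
            good.append(transform(record))
        except (KeyError, ValueError) as exc:
            # Log a structured message so the failure is searchable later.
            logger.error(json.dumps({"record": record, "error": repr(exc)}))
            dead_letter.append(record)
    error_rate = len(dead_letter) / len(records) if records else 0.0
    return good, dead_letter, error_rate > max_error_rate


def to_revenue(record):
    return float(record["quantity"]) * float(record["price"])


records = [
    {"quantity": "2", "price": "10.0"},
    {"quantity": "oops", "price": "1.0"},  # malformed quantity
    {"quantity": "1"},                     # missing price
]
good, dead_letter, alert = process_records(records, to_revenue)
```

In a real pipeline the log lines would land in Cloud Logging and the alert flag would map to a Cloud Monitoring alerting policy or an Airflow failure callback.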

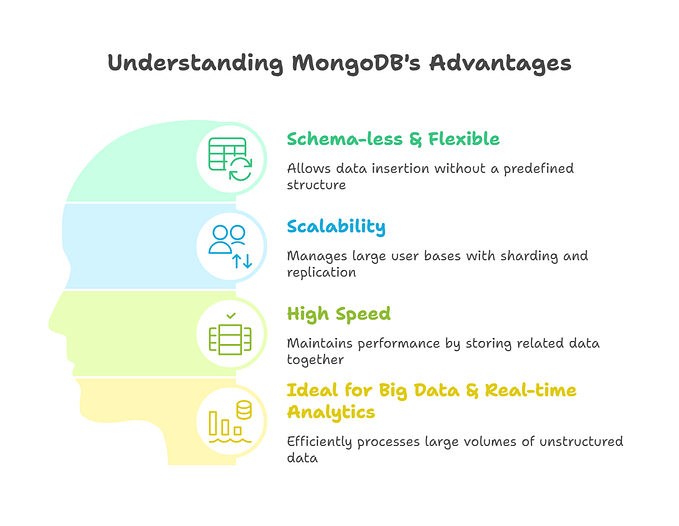

Figure 2 outlines the roadmap for building and operationalizing data processing systems. It emphasizes the key steps: identifying the need for data processing, selecting ETL or ELT pipelines, automating workflows, ensuring high availability for scalability, and implementing robust error-handling mechanisms to maintain workflow efficiency.

Key GCP Services for Building and Operationalizing Data Systems

Let’s explore the key tools and services you’ll use:

- Dataflow: Fully managed service for batch and stream processing. Scales automatically and ensures fault tolerance.

- Dataproc: Managed Hadoop/Spark service for distributed processing of large datasets.

- Cloud Composer: Workflow orchestration service built on Apache Airflow.

- BigQuery: Serverless data warehouse for analytics and transformations.

- Cloud Storage: Object storage for raw or intermediate data.

- Cloud Monitoring and Logging: Tools for tracking system performance and identifying issues.

Figure 3: This illustration highlights essential Google Cloud Platform (GCP) tools for managing data systems efficiently.

Real-World Applications

Use Case: Daily Transaction Data Processing

Scenario: A financial institution processes millions of transactions daily to detect fraud and generate compliance reports.

Requirements:

- Ingest Data: Collect transaction data from multiple sources.

- Transform Data: Cleanse and enrich data for fraud detection algorithms.

- Store Data: Save processed data for analytical queries.

- Automate Workflows: Schedule daily ETL tasks.

Solution Architecture:

- Use Cloud Storage to store raw transaction data.

- Process data using Dataflow for transformations and fraud detection.

- Load processed data into BigQuery for analytics.

- Use Cloud Composer to automate and monitor the workflow.

Hands-On Example: Automate a Batch ETL Workflow

Objective:

Create an ETL pipeline to process daily sales data, load it into BigQuery, and schedule the job using Cloud Composer.

Step-by-Step Guide:

Upload Raw Data to Cloud Storage:

- Create a bucket and upload a CSV file containing sales data (e.g., `daily_sales.csv`).

Set Up BigQuery:

- Create a dataset named `sales_analysis`.

- Create a table named `daily_sales` with columns like `date`, `product_id`, `quantity`, and `revenue`.
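If you prefer to script this step instead of using the console, a sketch with the `google-cloud-bigquery` client library could look like the following. The column types are assumptions (the step above only names the columns), `create_sales_table` is a hypothetical helper, and running it requires the library plus valid credentials.

```python
# Column types are assumptions; the step above only names the columns.
DAILY_SALES_SCHEMA = [
    ("date", "DATE"),
    ("product_id", "STRING"),
    ("quantity", "INTEGER"),
    ("revenue", "FLOAT"),
]


def create_sales_table(project_id):
    """Create the dataset and table if they do not exist yet."""
    # Imported lazily so the schema above can be inspected without the library.
    from google.cloud import bigquery

    client = bigquery.Client(project=project_id)
    client.create_dataset(f"{project_id}.sales_analysis", exists_ok=True)
    schema = [bigquery.SchemaField(name, kind) for name, kind in DAILY_SALES_SCHEMA]
    table = bigquery.Table(f"{project_id}.sales_analysis.daily_sales", schema=schema)
    return client.create_table(table, exists_ok=True)
```

Calling `create_sales_table('YOUR_PROJECT_ID')` is safe to repeat, because `exists_ok=True` makes both calls no-ops when the dataset or table already exists.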

Build a Dataflow Pipeline:

- Use Apache Beam (Python or Java) to read data from Cloud Storage, transform it, and load it into BigQuery.

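    import csv

    import apache_beam as beam
    from apache_beam.options.pipeline_options import PipelineOptions

    # Assumed column order of daily_sales.csv; adjust to match your file.
    COLUMNS = ['date', 'product_id', 'quantity', 'price']


    def parse_csv(line):
        # Turn one CSV line into a dict keyed by column name.
        return dict(zip(COLUMNS, next(csv.reader([line]))))


    class TransformData(beam.DoFn):
        def process(self, record):
            # Derive revenue and emit only the columns of the daily_sales table.
            quantity = int(record['quantity'])
            yield {
                'date': record['date'],
                'product_id': record['product_id'],
                'quantity': quantity,
                'revenue': quantity * float(record['price']),
            }


    options = PipelineOptions(
        runner='DataflowRunner',
        project='YOUR_PROJECT_ID',
        temp_location='gs://YOUR_BUCKET/temp'
    )

    with beam.Pipeline(options=options) as p:
        (p
         # skip_header_lines=1 assumes the file starts with a header row.
         | 'Read from GCS' >> beam.io.ReadFromText(
             'gs://YOUR_BUCKET/daily_sales.csv', skip_header_lines=1)
         | 'Parse CSV' >> beam.Map(parse_csv)
         | 'Transform Data' >> beam.ParDo(TransformData())
         | 'Write to BigQuery' >> beam.io.WriteToBigQuery(
             'YOUR_PROJECT_ID:sales_analysis.daily_sales',
             schema='SCHEMA_AUTODETECT'))

Set Up Cloud Composer: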

- Create a DAG (Directed Acyclic Graph) in Cloud Composer to schedule the Dataflow job.

- Define task dependencies and retry policies in Python.

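    from datetime import datetime, timedelta

    from airflow import DAG
    from airflow.operators.bash import BashOperator

    default_args = {
        'start_date': datetime(2023, 1, 1),
        'retries': 3,                         # retry a failed task up to three times
        'retry_delay': timedelta(minutes=5),  # wait between retries
    }

    with DAG(
        'daily_sales_etl',
        default_args=default_args,
        schedule_interval='@daily',
        catchup=False,  # do not backfill every day since start_date
    ) as dag:
        # Launch the Beam pipeline; adjust the script path to where the file
        # is stored in your Composer environment.
        start_etl = BashOperator(
            task_id='start_etl',
            bash_command='python dataflow_pipeline.py'
        )

Monitor the Workflow: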

- Use Cloud Monitoring and Logging to track job status and troubleshoot errors.

Common Pitfalls and How to Avoid Them

1. Inefficient Dataflow Pipelines:

- Mistake: Poorly written transformations leading to high costs.

- Solution: Optimize pipelines by filtering and aggregating data early.

2. Missing Retry Logic:

- Mistake: Lack of retry policies in workflows.

- Solution: Use Cloud Composer to define retries for failed tasks.

3. Overlooking Monitoring:

- Mistake: Not setting up alerts for workflow failures.

- Solution: Use Cloud Monitoring to configure custom alerts.
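To make the first pitfall concrete, here is a small plain-Python sketch of why filtering early matters. The `enrich` function is a hypothetical stand-in for any expensive step (a lookup, an API call, a shuffle), and the sample records are invented for illustration.

```python
def enrich(record, counter):
    """Stand-in for an expensive step such as a lookup or API call."""
    counter["calls"] += 1
    return {**record, "revenue": record["quantity"] * record["price"]}


def filter_late(records, counter):
    # Enrich everything, then throw away rows that were never needed.
    enriched = [enrich(r, counter) for r in records]
    return [r for r in enriched if r["quantity"] > 0]


def filter_early(records, counter):
    # Drop unneeded rows first, so the expensive step sees less data.
    kept = (r for r in records if r["quantity"] > 0)
    return [enrich(r, counter) for r in kept]


records = [
    {"product_id": "p1", "quantity": 2, "price": 10.0},
    {"product_id": "p2", "quantity": 0, "price": 5.0},
    {"product_id": "p3", "quantity": 0, "price": 7.5},
]
late_counter, early_counter = {"calls": 0}, {"calls": 0}
late = filter_late(records, late_counter)
early = filter_early(records, early_counter)
```

Both versions return the same rows, but the early filter runs the expensive step once instead of three times. In a Beam pipeline the same idea means placing `beam.Filter` before costly transforms.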

Conclusion

Building and operationalizing data processing systems is a critical skill for data engineers. By leveraging tools like Dataflow, Cloud Composer, and BigQuery, you can automate workflows, handle large-scale data processing, and ensure system reliability.

Stay hands-on with GCP services to master this domain. In the next blog, we’ll dive into Operationalizing Machine Learning Models to unlock advanced insights from your data.

Let’s continue mastering GCP Data Engineering together!